Products Overview

Accelerator IP for AI Designs

AI Accelerator IP for Edge Devices

Edge AI designs require a careful balance of performance, power consumption, area, and latency. Customized for your specific use cases, Origin™ is the ideal NPU IP for optimizing application performance from the smallest edge nodes to smartphones to automobiles.

Optimized Architecture for Your Trained Networks

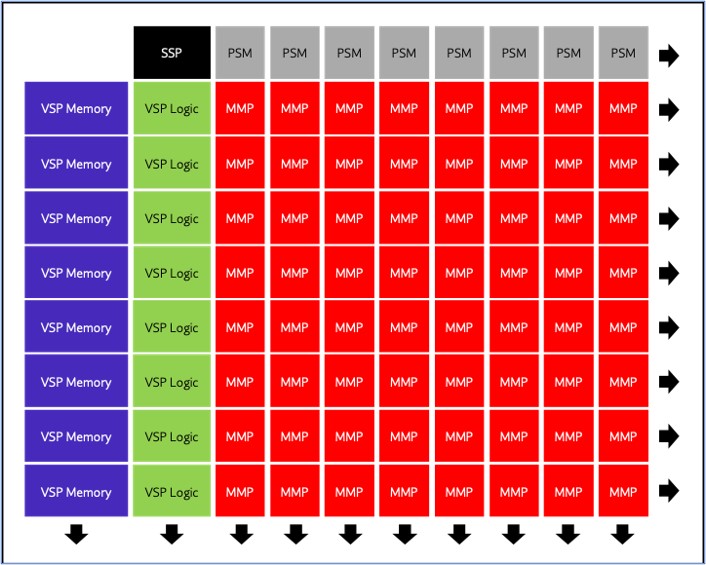

Origin delivers full accuracy and predictable performance without needing hardware-specific optimizations or changes to your trained model. Its patented packet-based execution architecture provides single-core performance up to 128 TOPS and sustained utilization rates of 70-90%, measured in silicon running common AI workloads such as ResNet. This best-in-class performance and utilization enables users to run AI models with less power consumption than alternative solutions.
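To make the utilization figures concrete, the short sketch below converts a peak TOPS rating and a sustained utilization rate into effective throughput. It assumes that effective throughput is simply peak TOPS multiplied by utilization. The function name `effective_tops` is hypothetical and not part of any Expedera tool. Applying the quoted 70-90% range to the 128 TOPS peak is illustrative only, since the text does not say both figures come from the same configuration.

```python
def effective_tops(peak_tops: float, utilization: float) -> float:
    """Sustained throughput, assuming it equals peak TOPS times utilization (0.0-1.0)."""
    if not 0.0 <= utilization <= 1.0:
        raise ValueError("utilization must be between 0 and 1")
    return peak_tops * utilization


# Illustrative only: the quoted utilization range applied to a 128 TOPS peak.
low = effective_tops(128, 0.70)
high = effective_tops(128, 0.90)
print(f"{low:.1f}-{high:.1f} effective TOPS")  # 89.6-115.2 effective TOPS
```

This is why utilization matters as much as the peak rating. An accelerator with a higher nominal TOPS figure but lower sustained utilization can deliver less real throughput on the same model.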

Silicon-proven and third-party tested, Expedera’s architecture is extremely power-efficient, achieving 18 TOPS/W. Origin NPUs offer deterministic performance and the smallest memory footprint, and they are fully scalable. This makes them perfect both for edge solutions with little or no DRAM bandwidth and for high-performance applications such as autonomous driving. The ASIL-B readiness-certified Origin product family offers the ideal solution for engineers looking for a single NPU architecture that easily runs RNN, CNN, LSTM, LLM, and other network types while maintaining optimal processing performance, power, and area.
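An efficiency figure in TOPS/W can be read as tera-operations per joule. The sketch below uses that reading to estimate energy per operation and the power needed for a given sustained load. It assumes efficiency stays constant at 18 TOPS/W, which is a simplification: real efficiency varies with workload, precision, and configuration. The 10 TOPS workload and the helper names are hypothetical.

```python
def power_watts(sustained_tops: float, tops_per_watt: float) -> float:
    """Estimated power draw, assuming efficiency is constant at the given TOPS/W."""
    return sustained_tops / tops_per_watt


def energy_per_op_joules(tops_per_watt: float) -> float:
    """TOPS/W equals 1e12 ops per joule, so each op costs 1 / (TOPS/W * 1e12) joules."""
    return 1.0 / (tops_per_watt * 1e12)


EFFICIENCY = 18.0  # TOPS/W, the figure quoted above

# Hypothetical workload needing 10 TOPS of sustained compute.
print(f"{power_watts(10.0, EFFICIENCY):.2f} W")                               # 0.56 W
print(f"{energy_per_op_joules(EFFICIENCY) * 1e15:.1f} fJ per operation")      # 55.6 fJ per operation
```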

Full TVM-Based Software Stack

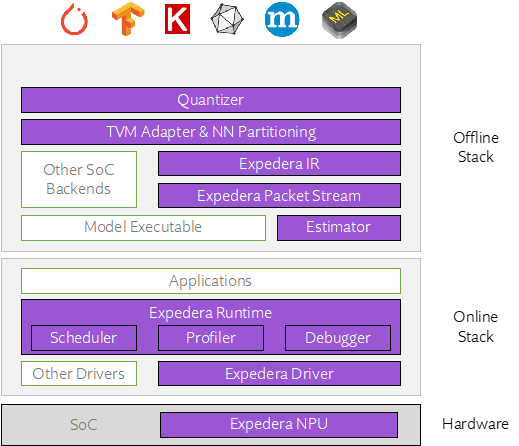

Expedera’s Origin IP platform includes a full TVM-based software stack that enables developers to work efficiently as they deploy their networks on the target hardware. The software stack provides a choice of two flows – an offline compilation flow based on the TVM compiler and an interpreted flow for on-device or online use. TVM enables a strategy of writing once and deploying everywhere. It supports all popular frameworks, including TensorFlow, TensorFlow Lite, PyTorch, and ONNX, allowing developers to continue working with their favorite frameworks.

The software stack automatically handles tasks such as packetization and debugging. It also provides user-friendly options such as mixed-precision quantization (using your tools or ours), custom layer and custom network support, and multi-job APIs.
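Mixed-precision quantization assigns different numeric precisions to different layers, keeping wider formats only where narrower ones lose too much accuracy. The sketch below shows a generic version of that idea. It is not Expedera's quantizer. It uses symmetric per-tensor quantization and picks, per layer, the narrowest candidate bit width whose worst-case weight error stays within a tolerance. The layer names, weights, tolerance, and helper functions are all hypothetical. Production tools typically also use calibration data, per-channel scales, and end-to-end accuracy checks.

```python
from typing import Dict, List, Sequence


def quantize(values: Sequence[float], bits: int) -> List[float]:
    """Symmetric per-tensor quantization: snap to a signed integer grid, then dequantize."""
    qmax = 2 ** (bits - 1) - 1  # e.g. 127 for 8 bits
    max_abs = max(abs(v) for v in values)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    return [max(-qmax, min(qmax, round(v / scale))) * scale for v in values]


def max_error(values: Sequence[float], bits: int) -> float:
    """Largest absolute difference between original and quantized values."""
    return max(abs(a - b) for a, b in zip(values, quantize(values, bits)))


def choose_bitwidths(layers: Dict[str, Sequence[float]], tolerance: float,
                     candidates: Sequence[int] = (8, 16)) -> Dict[str, int]:
    """Per layer, pick the narrowest bit width within tolerance, else the widest candidate."""
    plan: Dict[str, int] = {}
    for name, weights in layers.items():
        plan[name] = next(
            (b for b in sorted(candidates) if max_error(weights, b) <= tolerance),
            max(candidates),
        )
    return plan


# Hypothetical layers: one with a narrow weight range, one with a large outlier
# that stretches the 8-bit grid too coarsely for its small weights.
layers = {
    "conv1": [0.12, -0.30, 0.25, 0.05],
    "fc_out": [0.5, -0.77, 0.03, 8.0],
}
print(choose_bitwidths(layers, tolerance=0.005))  # {'conv1': 8, 'fc_out': 16}
```

The outlier in `fc_out` forces a large scale factor, so its small weights round poorly at 8 bits. That is the kind of layer a mixed-precision plan keeps at higher precision while the rest of the network runs narrower.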

Origin Line of Products

Origin E1

The Origin E1 processing cores are individually optimized for a subset of neural networks commonly used in home appliances, edge nodes, and other small consumer devices. The E1 LittleNPU supports always-sensing cameras found in smartphones, smart doorbells, and security cameras.

Origin E2

The Origin E2 is designed for power-sensitive on-chip applications that require no off-chip memory. It is suitable for low-power applications such as mobile phones and edge nodes and, like all Expedera NPUs, is tunable to specific workloads.

Origin E6

Origin E6, optimized to balance power and performance, uses SoC cache or DRAM access during runtime and supports advanced system memory management. Supporting multiple jobs, the E6 runs a wide range of AI models in smartphones, tablets, edge servers, and other devices.

Origin E8

Origin E8 is designed for high-performance applications such as autonomous vehicles/ADAS and data centers. It offers superior TOPS performance while dramatically reducing DRAM requirements and system BOM costs, and it supports multiple jobs. Thanks to its low power consumption, the Origin E8 is a good fit for passively cooled deployments, even at 128 TOPS.

TimbreAI T3

TimbreAI T3 is an ultra-low-power artificial intelligence (AI) inference engine designed for noise reduction use cases in power-constrained devices such as headsets. TimbreAI requires no external memory access, saving system power while increasing performance and reducing chip size.
