Despite significant improvements in throughput, edge AI accelerators (Neural Processing Units, or NPUs) are still often underutilized. Inefficient management of weights and activations leaves fewer of the available cores performing multiply-accumulate (MAC) operations. Edge AI…

A Buyer's Guide to an NPU

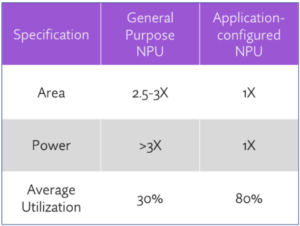

Choosing the right inference NPU (Neural Processing Unit) is a critical decision for a chip architect. There’s a lot at stake because the AI landscape constantly changes, and the choices will impact overall product cost,…

When Little is Better…Introducing the Always-Sensing LittleNPU

At the recent Embedded Vision Summit, Expedera chief scientist and co-founder Sharad Chole detailed LittleNPU, our new AI processing approach for always-sensing smartphones, security cameras, doorbells, and other consumer devices. Always-sensing cameras persistently sample and…

Can Compute-In-Memory Bring New Benefits To Artificial Intelligence Inference?

Compute-in-memory (CIM) is not necessarily an Artificial Intelligence (AI) solution; rather, it is a memory management solution. CIM could bring advantages to AI processing by speeding up the multiplication operation at the heart of AI…

Sometimes Less is More—Introducing the New Origin E1 Edge AI Processor

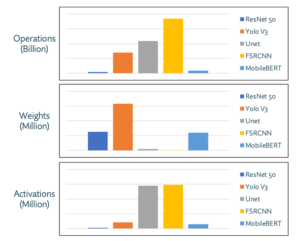

All neural networks have similar components, including neurons, synapses, weights, biases, and functions. But each network has unique requirements based on the number of operations, weights, and activations that must be processed. This is apparent…
